SB Intuitions, Building Unique Japanese LLM

Released Japanese LLM with 7 Billion, 13 Billion, and 65 Billion Parameters to Contribute to R&D in Academia and Industry

June 14, 2024

SB Intuitions Corp.

SB Intuitions Corp. (Headquarters: Minato-ku, Tokyo; President & CEO: Hironobu Tamba; “SB Intuitions”) announces the release of Japanese large language models (LLMs) with 7 billion, 13 billion, and 65 billion parameters. SB Intuitions is working to develop a 390 billion-parameter LLM by the end of fiscal year 2024.

Released models

- Sarashina1-7B

- Sarashina2-7B

- Sarashina1-13B

- Sarashina2-13B

- Sarashina1-65B

- Sarashina2-70B※

Sarashina2 is an enhanced model that builds on knowledge gained through the development of Sarashina1, and it shows significant performance improvements over Sarashina1 in the performance evaluations presented in this release. Sarashina1 and Sarashina2, which were built under differing conditions, have been released simultaneously to promote LLM research and development through a range of academic and industrial analyses. We plan to outline the differing conditions at a later date on the SB Intuitions tech blog (https://www.sbintuitions.co.jp/blog/) (currently in Japanese only).

Note: The released models are pre-trained models with no instruction tuning.

Because they have not been tuned to follow human instructions, we do not envision offering them as a working service in their current form.

URL for released model

https://huggingface.co/sbintuitions

※ Sarashina2-70B was released on August 7, 2024. (Note added August 7, 2024)

Results of performance evaluations

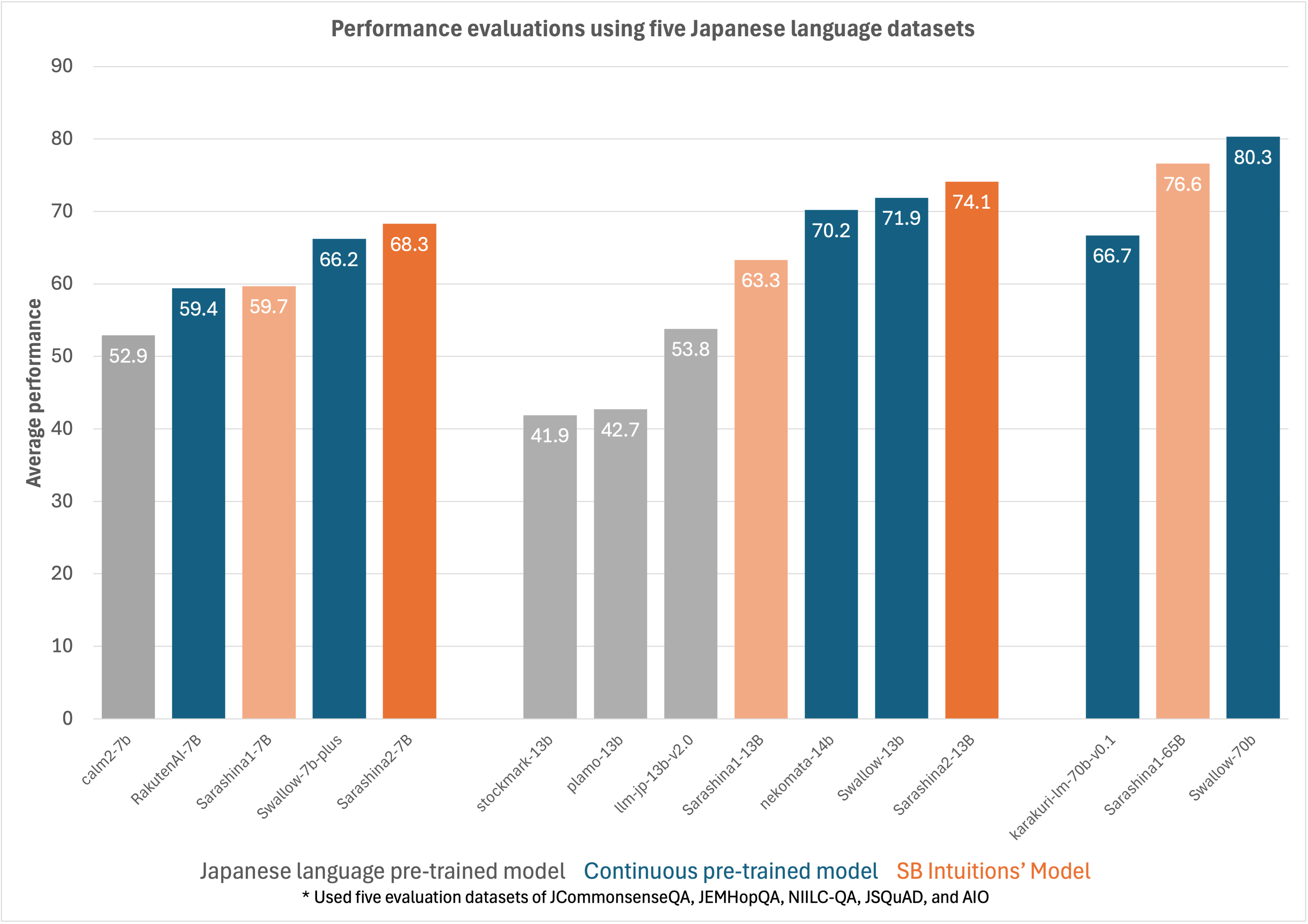

Performance evaluations using five Japanese-language benchmark datasets (Note 1) show that, among models with a similar number of parameters, Sarashina2 outperforms both Japanese models pre-trained from scratch and continually pre-trained models (Note 2), which have shown high performance. Training of Sarashina2-70B is continuing at present.

(Note 1): Average over the five evaluation datasets: JCommonsenseQA (multiple-choice question answering, [Kurihara et al., 2022]), JEMHopQA (open-ended question answering, [Ishii et al., 2023]), NIILC-QA (open-ended question answering, [Sekine, 2003]), JSQuAD (machine reading comprehension, [Kurihara et al., 2022]), and AIO (open-ended question answering, [Suzuki et al., 2020]).
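As an illustration of how a single figure can be derived from the five datasets in Note 1, the following is a minimal sketch that assumes an unweighted mean; the release does not state whether any weighting is applied. The function name `benchmark_average` and the scores are hypothetical placeholders, not results from this evaluation.

```python
from statistics import mean

# The five evaluation datasets named in Note 1.
DATASETS = ("JCommonsenseQA", "JEMHopQA", "NIILC-QA", "JSQuAD", "AIO")


def benchmark_average(scores: dict[str, float]) -> float:
    """Return the unweighted mean score over the five datasets.

    Assumption: each dataset contributes equally to the average.
    Raises ValueError if a score for any dataset is missing.
    """
    missing = [name for name in DATASETS if name not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")
    return mean(scores[name] for name in DATASETS)


# Hypothetical placeholder scores, NOT figures from the release.
example_scores = {
    "JCommonsenseQA": 80.0,
    "JEMHopQA": 60.0,
    "NIILC-QA": 50.0,
    "JSQuAD": 90.0,
    "AIO": 70.0,
}

print(benchmark_average(example_scores))
```

Requiring all five scores keeps averages comparable across models: a model missing one dataset raises an error instead of being averaged over fewer tasks.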

(Note 2): Refers to models obtained by continuing pre-training on top of an existing pre-trained model. A number of models originally pre-trained on multiple languages have undergone continued pre-training on Japanese text, and these have been reported to achieve relatively high performance.

Origins of “Sarashina” model name

SB Intuitions seeks to create a multimodal foundation model with the highest quality of Japanese, including a language model that specializes in Japanese. The name “Sarashina” originates from the “Sarashina Diary,” which contains the first-ever reference to the Takeshiba area, the location of the company's headquarters, and embodies the company's ambition to create globally utilized models from Japan.

At SB Intuitions, we will continue to develop even larger LLMs and to promote research and development toward the social implementation of LLMs.